What is GATE/0?

Introduction

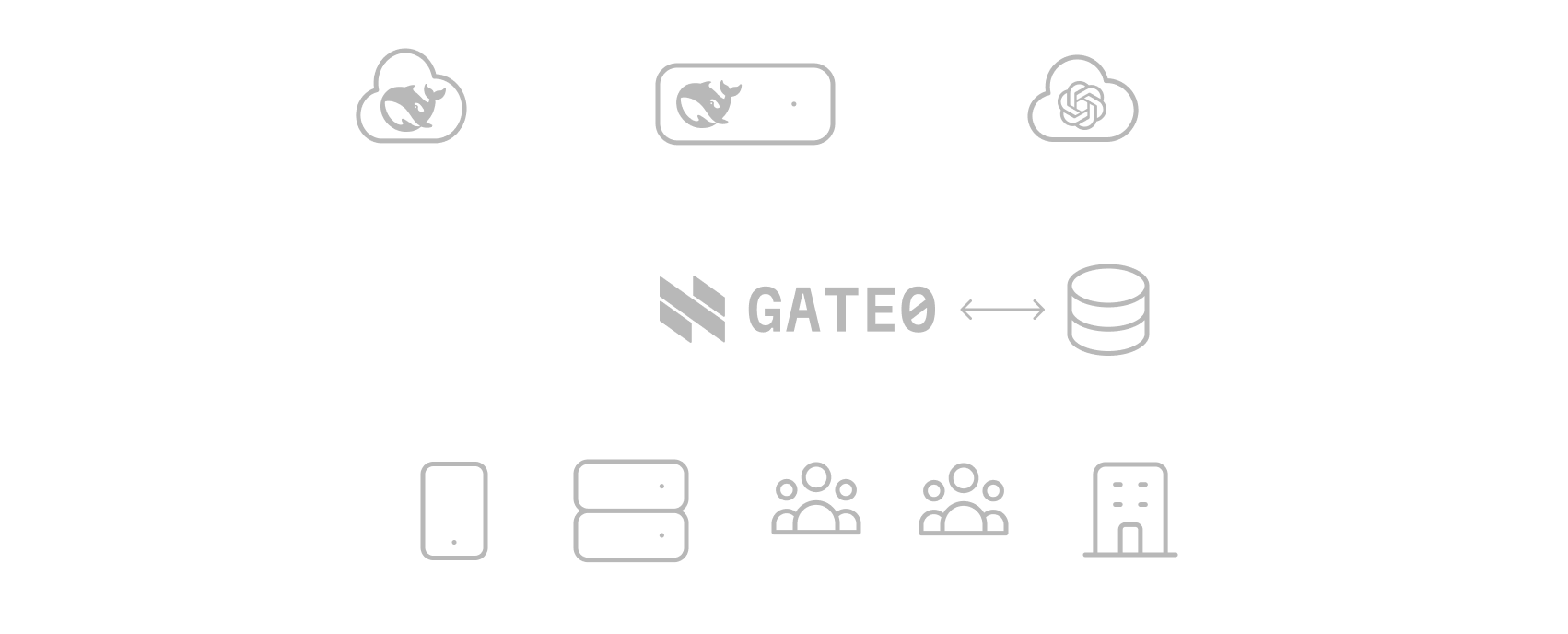

GATE/0 is an intelligent LLM proxy that enables teams to monitor, analyze, and forecast language model usage and costs. With detailed analytics, real-time tracking, and predictive insights, GATE/0 helps optimize performance and budget across all your LLM workflows.

GATE/0 exposes an OpenAI-compatible REST API and lets you enrich requests with custom labels. This makes it easy to allocate costs and track usage by project, team, customer, or any other dimension that matters to your organization, with only a few configuration changes to your existing codebase.
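Because the API follows the OpenAI request format, a labeled request can also be assembled without an SDK. The sketch below only builds the request and does not send it. It assumes the base URL listed in the setup steps later on this page, the conventional OpenAI `/chat/completions` path, and Bearer-token authentication; none of these is confirmed here for raw HTTP use. The function name and label values are illustrative.

```python
import json
import os
import urllib.request

# Assumption: base URL taken from the setup steps on this page.
GATE0_BASE_URL = "https://gateway.gate0.io/v1"


def build_chat_request(api_key, model, prompt, labels):
    """Build (but do not send) a chat completion request carrying custom labels."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "metadata": labels,  # custom labels, placed in the body as in the SDK examples
    }
    return urllib.request.Request(
        url=f"{GATE0_BASE_URL}/chat/completions",  # assumed OpenAI-style path
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # assumed OpenAI-style auth
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_chat_request(
    api_key=os.environ.get("GATE0_API_KEY", "placeholder-key"),
    model="openai/gpt-4o",
    prompt="Hello, how are you?",
    labels={"project": "alpha", "team": "search", "customer": "acme"},
)
```

Passing `request` to `urllib.request.urlopen` would send it, provided a valid key is set.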

How It Works

Get started with GATE/0 in less than 2 minutes. Here's how:

JavaScript (OpenAI SDK):

```javascript
import OpenAI from 'openai';

const openai = new OpenAI({
  baseURL: "https://gateway.gate0.io/v1", // GATE/0 cloud proxy URL
  apiKey: process.env.GATE0_API_KEY,      // API key provided by GATE/0
});

const chatCompletion = await openai.chat.completions.create({
  model: "openai/gpt-4o", // Model name with provider prefix
  messages: [{ role: 'user', content: "Hello, how are you?" }],
  metadata: { // Custom labels
    "project": "alpha",
    "client": "frontend",
    "env": "prod"
  }
});

console.log(chatCompletion.choices);
```

Python (OpenAI SDK):

```python
from openai import OpenAI
import os

client = OpenAI(
    base_url="https://gateway.gate0.io/v1",   # GATE/0 cloud proxy URL
    api_key=os.environ.get("GATE0_API_KEY"),  # API key provided by GATE/0
)

completion = client.chat.completions.create(
    model="openai/gpt-4o",  # Model name with provider prefix
    messages=[
        {"role": "user", "content": "Hello, how are you?"}
    ],
    extra_body={
        "metadata": {  # Custom labels
            "project": "alpha",
            "client": "frontend",
            "env": "prod"
        }
    }
)

print(completion.choices[0].message)
```

Python (LangChain):

```python
from langchain_openai import ChatOpenAI
import os

chat = ChatOpenAI(
    openai_api_base="https://gateway.gate0.io/v1",   # GATE/0 cloud proxy URL
    openai_api_key=os.environ.get("GATE0_API_KEY"),  # API key provided by GATE/0
    model="openai/gpt-4o",  # Model name with provider prefix
    default_headers={  # Custom labels
        "x-gate0-label-project": "alpha-1",
        "x-gate0-label-client": "frontend",
        "x-gate0-label-env": "prod"
    }
)

response = chat.invoke("Hello, how are you?")

print(response.content)
```

As the examples above show, you can keep using your preferred AI library. The only changes you need to make are:

- Base URL: Replace it with https://gateway.gate0.io/v1

- API Key: Use the API key provided by GATE/0 instead of your original one

- Model Name: Specify the model with a provider prefix. For example, use `openai/gpt-4o` instead of `gpt-4o`

- Labels (Optional): Add custom labels to allocate and track costs more effectively
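The changes listed above can be collected in one place so that application code stays untouched. The following is a minimal sketch, not an official helper: the function names are hypothetical, and it assumes that the `x-gate0-label-*` header scheme shown in the LangChain example is accepted for other clients as well.

```python
GATE0_BASE_URL = "https://gateway.gate0.io/v1"  # base URL from the list above


def with_provider_prefix(model, provider="openai"):
    """Return 'provider/model', leaving already-prefixed names unchanged."""
    return model if "/" in model else f"{provider}/{model}"


def label_headers(labels):
    """Map labels to x-gate0-label-* headers (scheme assumed from the LangChain example)."""
    return {f"x-gate0-label-{key}": str(value) for key, value in labels.items()}


def gate0_settings(api_key, model, labels=None):
    """Collect base URL, API key, prefixed model name, and label headers in one dict."""
    return {
        "base_url": GATE0_BASE_URL,
        "api_key": api_key,
        "model": with_provider_prefix(model),
        "default_headers": label_headers(labels or {}),
    }


settings = gate0_settings("your-gate0-key", "gpt-4o", {"project": "alpha", "env": "prod"})
print(settings["model"])  # openai/gpt-4o
```

The `base_url`, `api_key`, and `default_headers` entries can be passed to the `OpenAI(...)` constructor, while `model` goes to the individual request. Keeping the prefixing logic in one function means an already-prefixed name such as `openai/gpt-4o` is not prefixed twice.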